What is the correct way to install Ubuntu on RAID1 in SuperServer 1028R-WTRT?

Good day, everyone. I ran into a problem while installing Ubuntu on a SuperServer 1028R-WTRT. Two working solutions have already been found, but I would like to ask the community which approach is correct and optimal. The platform is a SuperServer 1028R-WTRT with an X10DRW-iT motherboard. The task is to configure RAID1 (mirror), either with the built-in C610/X99 series chipset sSATA controller in RAID mode or with software RAID in Ubuntu itself (mdadm). The problem is this: if the installer's automatic disk partitioning is used, then in both cases the result after installation is either a black screen with a cursor or a drop into grub / grub rescue, depending on the installation option. Something similar has already been described here: How to run Ubuntu Server with Supermicro Intel Raid 10?

Through various experiments, two working solutions were found:

Solution 1.

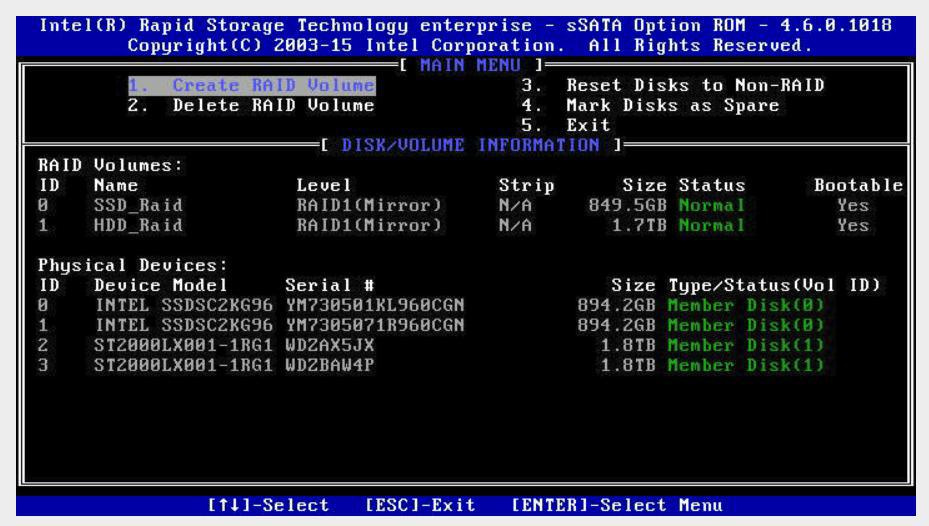

1. Create a RAID1 array using the controller:

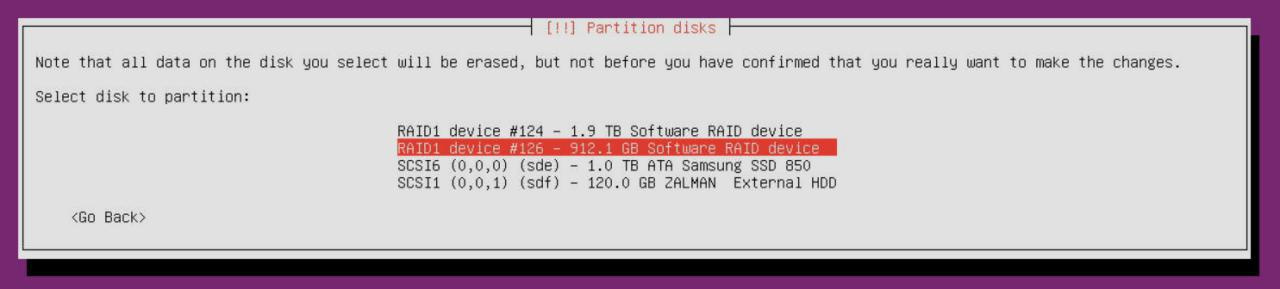

2. Boot in UEFI mode. During the Ubuntu installation, the RAID appears as a separate device.

3. Partition the device manually, creating a single ext4 partition with the mount point /, i.e. without ESP and swap partitions.

After that, everything boots successfully. If you let the installer partition the device itself, i.e. creating ESP, ext4 (/) and swap, then after the reboot we get a blinking cursor, as described here. The idea of not placing swap on the RAID was picked up there. This option works, but I would like to hear opinions: is such a configuration without swap viable on a production server? Or is it still worth creating swap later as a swap file on the already running system? The server has 96 GB of RAM.
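For reference, adding a swap file later on the running system could look roughly like the sketch below. It only builds the command lines and does not execute anything. The path `/swapfile` and the 8 GiB size are assumptions chosen for illustration, not values from this setup.

```python
def swapfile_commands(path="/swapfile", size_gib=8):
    """Return the commands (as argv lists) that would create and enable a swap file.

    The default path and size are illustrative assumptions.
    """
    if size_gib <= 0:
        raise ValueError("size_gib must be positive")
    return [
        ["fallocate", "-l", f"{size_gib}G", path],  # reserve space for the file
        ["chmod", "600", path],                     # swap must not be world-readable
        ["mkswap", path],                           # write the swap signature
        ["swapon", path],                           # enable it immediately
    ]


def fstab_line(path="/swapfile"):
    """Return the /etc/fstab entry that would enable the swap file at boot."""
    return f"{path} none swap sw 0 0"


if __name__ == "__main__":
    for argv in swapfile_commands():
        print(" ".join(argv))
    print(fstab_line())
```

The commands would need to be run as root, and the fstab line appended to `/etc/fstab` so the swap file survives a reboot.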

Solution 2.

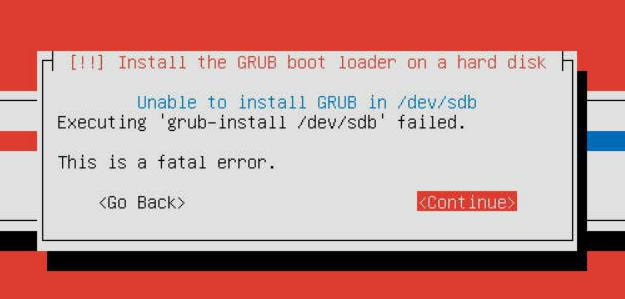

I had to fiddle around with software RAID in Ubuntu. I tried various boot options in both UEFI and Legacy BIOS. Basically, the problem boils down to either being unable to install GRUB at the installation stage or, again, being unable to boot from the resulting RAID:

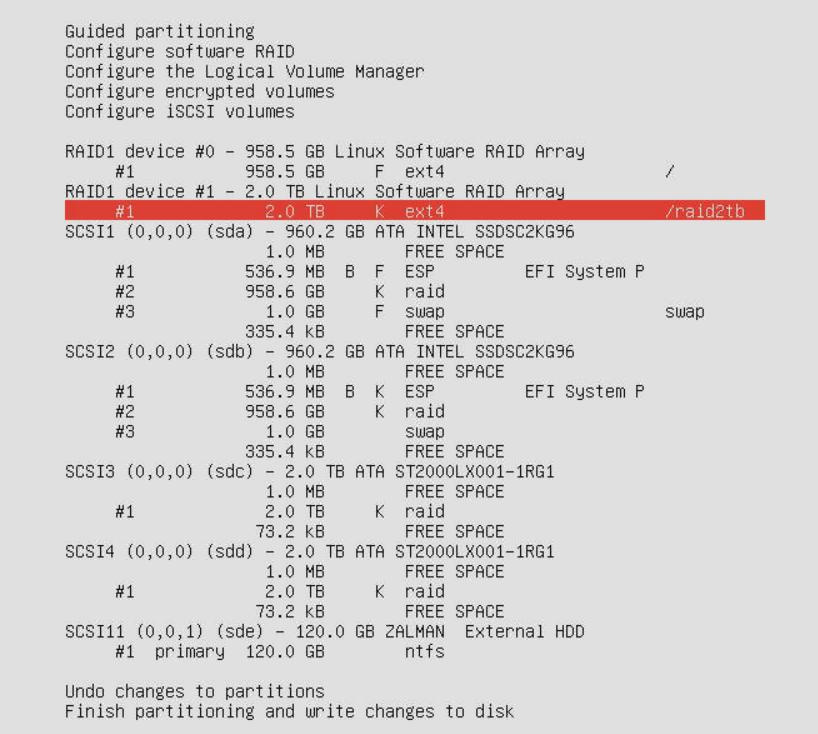

By trial and error, the following working layout for software RAID was found (tested with UEFI):

First, we partition both disks as usual, creating ESP, ext4 (/) and swap on each; this takes a few reboots. Then we change the type of the ext4 (/) partition to linux-raid on both drives, create a software RAID from the two linux-raid partitions, and create the ext4 (/) partition on top of it. After that, GRUB installs normally and the system boots normally from one disk (to boot from the second, the ESP clearly just needs to be copied to the other disk). This option works, but again it does not seem particularly correct.
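The same layout, expressed as command lines instead of installer screens, might look like the sketch below. It only builds the argv lists and runs nothing, since the real commands are destructive. The device names (`/dev/sda`, `/dev/sdb`, `/dev/md0`), the partition numbers (ESP = 1, root = 2), the boot entry label and the loader path `\EFI\ubuntu\shimx64.efi` are all assumptions for illustration. Names are built by appending the partition number, which fits `sdX`-style disks only.

```python
def raid1_commands(disk_a="/dev/sda", disk_b="/dev/sdb", root_part=2, md="/dev/md0"):
    """Commands that would turn the root partitions of two disks into an mdadm RAID1.

    Device names and partition numbers are illustrative assumptions.
    """
    part_a = f"{disk_a}{root_part}"
    part_b = f"{disk_b}{root_part}"
    return [
        # Mark the root partition on each disk as Linux RAID (GPT type code fd00).
        ["sgdisk", f"--typecode={root_part}:fd00", disk_a],
        ["sgdisk", f"--typecode={root_part}:fd00", disk_b],
        # Build the mirror from the two partitions.
        ["mdadm", "--create", md, "--level=1", "--raid-devices=2", part_a, part_b],
        # Put the ext4 root filesystem on top of the array.
        ["mkfs.ext4", md],
    ]


def second_esp_commands(disk_a="/dev/sda", disk_b="/dev/sdb", esp_part=1,
                        label="ubuntu (disk 2)"):
    """Commands that would copy the ESP to the second disk and register a boot entry."""
    return [
        ["dd", f"if={disk_a}{esp_part}", f"of={disk_b}{esp_part}"],
        ["efibootmgr", "--create", "--disk", disk_b, "--part", str(esp_part),
         "--label", label, "--loader", "\\EFI\\ubuntu\\shimx64.efi"],
    ]


if __name__ == "__main__":
    for argv in raid1_commands() + second_esp_commands():
        print(" ".join(argv))
```

Note that the ESP itself stays outside the array here, as in the layout described above, so it has to be re-copied whenever its contents change.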

So the question is: perhaps someone has a similar platform in operation. Which option would you use, the one described in solution #1 or #2? Perhaps you have a working configuration that is more "competent" and "fail-safe". I would be glad of any advice and suggestions.

P.S. By the way, the OS Compatibility Chart on the Supermicro website for the X10DRW-iT (the motherboard used in this platform) lists Ubuntu 16.04 LTS with the note A2: i-SATA/SATA (w/o RAID, AHCI mode).

The solution is actually very simple: give up on hardware RAID, since practice shows that operating it causes more problems:

1) if a driver update in the OS goes wrong, you lose access to the data

2) if the controller dies, you can recover the data only with a strictly identical controller running the same firmware version. With software RAID there is no controller, so there is nothing to die.

Install in AHCI mode with software RAID via mdadm.
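With mdadm, the state of the mirror can be read from `/proc/mdstat`, where a healthy two-disk RAID1 shows `[UU]` and a missing member shows up as `_`. A minimal sketch of such a check follows. The function name `degraded_arrays` is made up for this example, and it assumes arrays are named `mdN`.

```python
import re


def degraded_arrays(mdstat_text):
    """Return the names of md arrays whose member status contains '_' (a missing disk)."""
    degraded = []
    current = None
    for line in mdstat_text.splitlines():
        header = re.match(r"^(md\d+)\s*:", line)
        if header:
            current = header.group(1)
            continue
        # The status line ends with something like "[2/2] [UU]" or "[2/1] [U_]".
        status = re.search(r"\[([U_]+)\]\s*$", line)
        if current and status:
            if "_" in status.group(1):
                degraded.append(current)
            current = None
    return degraded


if __name__ == "__main__":
    with open("/proc/mdstat") as f:
        print(degraded_arrays(f.read()))
```

Run from cron or a monitoring agent, a non-empty result would mean one of the mirrors has lost a disk and needs attention.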

In principle, such a controller should not be used as a RAID controller: it causes more problems than software RAID and offers no advantages. This is not a high-end RAID controller with a powerful processor, plenty of memory and a battery, which really can give a noticeable speed increase...

Forget hardware RAID. Look at ZFS; in terms of convenience and reliability, it is hard to imagine anything better.

RAID is a rather complicated algorithm, much more complicated than it seems. In fact, a hardware RAID is not purely hardware but a combination of hardware and software: a piece of hardware running the software that actually implements the RAID. Inexpensive RAID solutions can be even slower than software ones, and they clearly add problems with flexibility and interchangeability. In general, I am for software RAID. Yes, there are cases where a hardware one is better, but these are usually very expensive solutions; sometimes it is easier to add a couple of disks and gain speed that way than to buy an expensive controller.
